by Erica L. Meltzer | Apr 1, 2016 | Blog, The New SAT

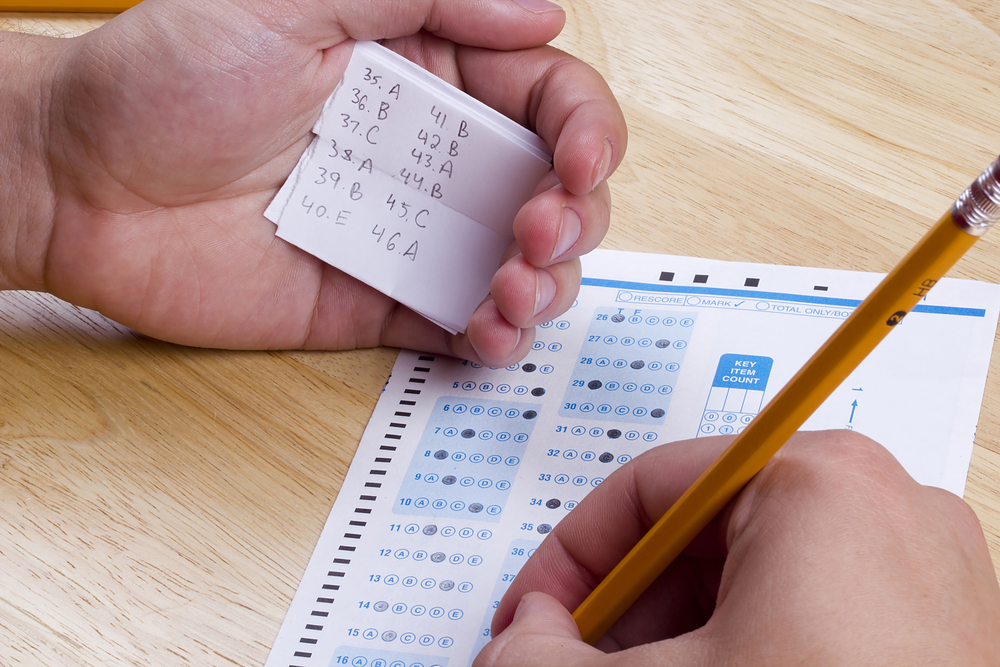

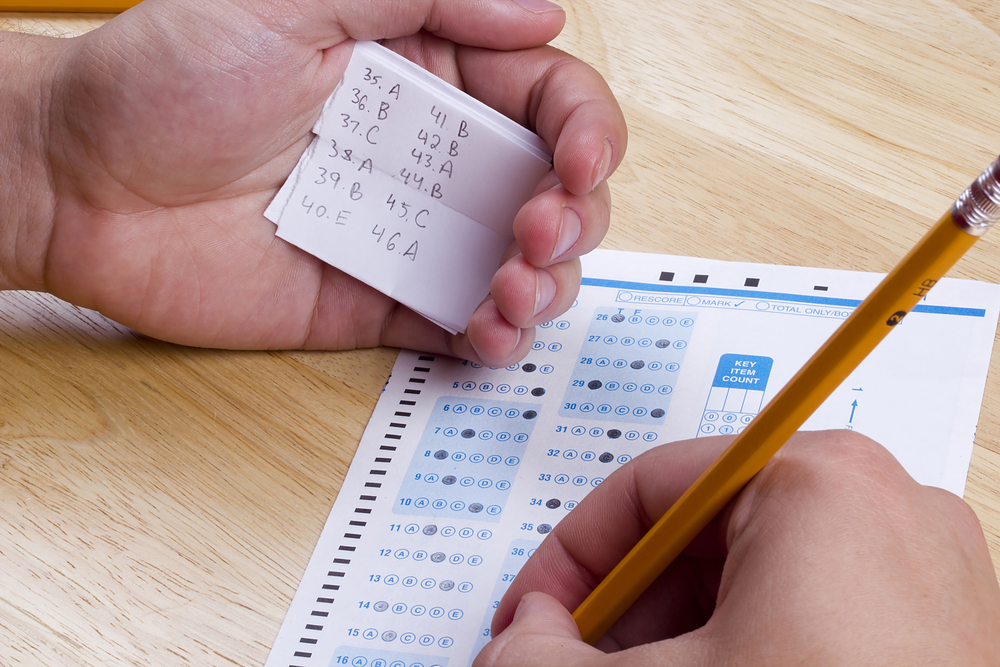

As predicted, the College Board’s decision to bar tutors from the first administration of the new SAT had little effect on the security of the test; questions from the March 5th administration of the new SAT quickly made an appearance on various Chinese websites as well as College Confidential.

Reuters has now broken a major story detailing the SAT “cartels” that have sprung up in Asia, as well as the College Board’s inconsistent and lackluster response to what is clearly a serious and widespread problem.

It’s a two-part series, and it clearly takes the College Board to task for allowing the breaches.

by Erica L. Meltzer | Mar 22, 2016 | Blog, The New SAT

Apparently I’m not the only one who has noticed something very odd about PSAT score reports. California-based Compass Education has produced a report analyzing some of the inconsistencies in this year’s scores.

The report raises more questions than it answers, but the findings themselves are very interesting. For anyone who has the time and the inclination, it’s well worth reading.

Some of the highlights include:

- Test-takers are compared to students who didn’t even take the test and may never take the test.

- In calculating percentiles, the College Board relied on an undisclosed sampling method when it could have relied on scores from students who actually took the exam.

- 3% of students scored in the 99th percentile.

- In some parts of the scale, scores were raised by as much as 10 percentage points between 2014 and 2015.

- More sophomores than juniors obtained top scores.

- Reading/writing benchmarks for both sophomores and juniors have been lowered by over 100 points; at the same time, the elimination of the wrong-answer penalty would permit a student to approach the benchmark while guessing randomly on every single question.

by Erica L. Meltzer | Mar 20, 2016 | Blog, The New SAT

Following the first administration of the new SAT, the College Board released a highly unscientific survey comparing 8,089 March 2016 test-takers to 6,494 March 2015 test-takers.

You can read the whole thing here, but in case you don’t care to, here are some highlights:

- 75% of students said the Reading Test was the same as or easier than they expected.

- 80% of students said the vocabulary on the test would be useful to them later in life, compared with 55% in March 2015.

- 59% of students said the Math section tests the skills and knowledge needed for success in college and career.

Leaving aside the absence of some basic pieces of background information that would allow a reader to evaluate just how seriously to take this report (why were different numbers of test-takers surveyed in 2015 vs. 2016? who exactly were these students? how were they chosen for the survey? what were their socio-economic backgrounds? what sorts of high schools did they attend, and what sorts of classes did they take? what sorts of colleges did they intend to apply to? were the two groups demographically comparable? etc., etc.), this is quite a remarkable set of statements.

by Erica L. Meltzer | Feb 29, 2016 | Blog, The New SAT

This just in: earlier today I met with a tutor colleague who told me that the College Board had sent emails to at least 10 of his New York-area colleagues who were registered for the first administration of the new SAT, informing them that their registration for the March 5th exam had been transferred to the May exam. Not coincidentally, the May test will be released, whereas the March one will not.

Another tutor had his testing location moved to, get this… Miami.

I also heard from a tutor in North Carolina whose registration was likewise transferred to May for “security measures.” Apparently this is a national phenomenon. Incidentally, the email she received gave her no information about why her registration had been cancelled for the March test. She had to call the College Board and wait 45 minutes on hold to get even a semi-straight answer from a representative. Along with releasing test scores on time, customer service is not exactly the College Board’s strong suit.

by Erica L. Meltzer | Feb 28, 2016 | Blog, The New SAT

The Washington Post reported yesterday that the new SAT will in fact continue to include an experimental section. According to James Murphy of the Princeton Review, guest-writing in Valerie Strauss’s column, the change was announced at a meeting for test-center coordinators in Boston on February 4th.

To sum up:

The SAT has traditionally included an extra section (either Reading, Writing, or Math) that is used for research purposes only and is not scored. In the past, every student taking the exam under regular conditions (that is, without extra time) received an exam that included one of these sections. On the new SAT, however, only students taking the test without the Essay will be given versions of the test that include experimental multiple-choice questions, and even then only some of those students. The College Board has not made it clear what percentage will take the “experimental” version, nor has it indicated how those students will be selected.

Murphy writes:

> In all the public relations the company has done for the new SAT, however, no mention has been made of an experimental section. This omission led test-prep professionals to conclude that the experimental section was dead.

He’s got that right — I certainly assumed the experimental section had been scrapped! And I spend a fair amount of time communicating with people who stay much more in the loop about the College Board’s less publicized wheelings and dealings than I do.

Murphy continues:

> The College Board has not been transparent about the inclusion of this section. Even in that one place it mentions experimental questions—the counselors’ guide available for download as a PDF — you need to be familiar with the language of psychometrics to even know that what you’re actually reading is the announcement of experimental questions.
>
> The SAT will be given in a standard testing room (to students with no testing accommodations) and consist of four components — five if the optional 50-minute Essay is taken — with each component timed separately. The timed portion of the SAT with Essay (excluding breaks) is three hours and 50 minutes. To allow for pretesting, some students taking the SAT with no Essay will take a fifth, 20-minute section. Any section of the SAT may contain both operational and pretest items.
>
> The College Board document defines neither “operational” nor “pretest.” Nor does this paragraph make it clear whether all the experimental questions will appear only on the fifth section, at the start or end of the test, or will be dispersed throughout the exam. During the session, I asked if all the questions on the extra section won’t count and was told they would not. This paragraph is less clear on that issue, since it suggests that experimental (“pretest”) questions can show up on any section.
>
> When The Washington Post asked for clarification on this question, they were sent the counselor’s paragraph, verbatim. Once again, the terminology was not defined and it was not clarified that “pretest” does not mean before the exam, but experimental.

For starters, I was unaware that the term “pretest” could have a second meaning. Even by the College Board’s current standards, that’s pretty brazen (although closer to the norm than not).

Second, I’m not sure how it is possible to have a standardized test that has different versions with different lengths, but one set of scores. (Although students who took the old test with accommodations did not receive an experimental section, they presumably formed a group small enough not to be statistically significant.) To keep scores as valid as possible, it would seem reasonable, at a bare minimum, to give as many students as possible the same version of the test.

As Murphy rightly points out, issues of fatigue and pacing can have a significant effect on students’ scores: a student who takes a longer test will almost certainly become more tired and thus more likely to answer incorrectly questions that he or she would otherwise have gotten right.

Third, I’m no expert in statistics, but there would seem to be some problems with this method of data collection. Because the old experimental section was given to nearly all test-takers, any information gleaned from it could be assumed to hold true for the general population of test-takers.

The problem now is not simply that only one group of testers will be given experimental questions, but that the group given experimental questions and the group not given experimental questions may not be comparable.

If you consider that the colleges requiring the Essay are, for the most part, quite selective, and that students tend not to apply to those schools unless they’re somewhere in the ballpark academically, then it stands to reason that the group sitting for the Essay will be, on the whole, a higher-scoring group than the group not sitting for the Essay.

As a result, the findings obtained from the non-Essay group might not apply to test-takers across the board. Let’s say, hypothetically, that test-takers in the Essay group are more likely to answer a certain question correctly than are test-takers in the non-Essay group. Because the only data obtained will be from students in the non-Essay group, the proportion of students answering that question correctly will be lower than it would be if the entire group of test-takers were taken into account.

If the same phenomenon repeats itself for many, or even all, experimental questions, and new tests are created based on the data gathered from the two unequal groups, then the entire level of the test will eventually shift down, perhaps further erasing some of the score gap but also giving a further advantage to the stronger (and likely more privileged) group of students on future tests.
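To make the hypothetical above concrete, here is a minimal sketch with invented numbers (these are illustrative assumptions, not College Board data): suppose 40% of test-takers sit for the Essay and answer a given question correctly 70% of the time, while the remaining 60% answer it correctly 50% of the time. The helper `proportion_correct` is hypothetical; it simply computes the weighted share of students answering correctly.

```python
# Hypothetical illustration only: all group shares and success rates are invented.

def proportion_correct(groups):
    """Weighted share answering correctly across (share_of_testers, p_correct) pairs."""
    total_share = sum(share for share, _ in groups)
    return sum(share * p for share, p in groups) / total_share

essay_group = (0.4, 0.70)      # assumed: 40% of testers, 70% answer correctly
non_essay_group = (0.6, 0.50)  # assumed: 60% of testers, 50% answer correctly

# What the question's difficulty looks like across everyone who takes the test
full_population = proportion_correct([essay_group, non_essay_group])

# What it looks like if only the non-Essay group sees the experimental question
pretest_sample = proportion_correct([non_essay_group])

print(f"Full population: {full_population:.0%} correct")  # Full population: 58% correct
print(f"Pretest sample:  {pretest_sample:.0%} correct")   # Pretest sample:  50% correct
```

Under these made-up numbers, the question looks noticeably harder in the pretest data than it really is for test-takers as a whole, which is the kind of skew described above.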

All of this is speculation, of course. It’s possible that the College Board has some way of statistically adjusting for the difference in the two groups (maybe the Hive can help with that!), but even so, you have to wonder… Wouldn’t it just have been better to create a five-part exam and give the same test to everyone?

by Erica L. Meltzer | Feb 5, 2016 | Blog, Issues in Education, The New SAT

A couple of weeks ago, I posted a guest commentary entitled “For What It’s Worth,” detailing the College Board’s attempt not simply to recapture market share from the ACT but to marginalize that company completely. I’m planning to write a fuller response to the post another time; for now, however, I’d like to focus on one point that was lurking between the lines of the original article but that I think could stand to be made more explicit. It’s pretty apparent that the College Board is competing very hard to reestablish its traditional dominance in the testing market (that’s been clear for a while now), but what’s less apparent is that it may not be a fair fight.

I want to take a closer look at the three states that the College Board has managed to wrest away from the ACT: Michigan, Illinois, and Colorado. All three of these states had fairly longstanding relationships with the ACT, and the announcements that they would be switching to the SAT came abruptly and caught a lot of people off guard. Unsurprisingly, they’ve also engendered a fair amount of pushback.