by Erica L. Meltzer | Mar 27, 2016 | Blog

As I’ve written about recently, the fact that the SAT has been altered from a predictive, college-aligned test to a test of skills associated with high school is one of the most overlooked changes in the discussion surrounding the new exam. Along with the elimination of ETS from the test-writing process, it signals just how radically the exam has been overhauled.

Although most discussions of the rSAT refer to the fact that the test is intended to be “more aligned with what students are doing in school,” the reality is that this alignment is an almost entirely new development rather than a “bringing back” of sorts. The implication — that the SAT was once intended to be aligned with a high school curriculum but has drifted away from that goal — is essentially false.

by Erica L. Meltzer | Dec 6, 2015 | Blog, Issues in Education, The New SAT

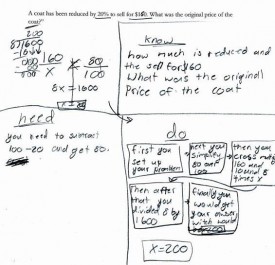

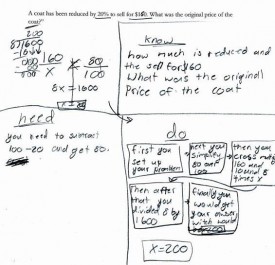

I’m not sure how I missed it when it came out, but Barry Garelick and Katherine Beals’s “Explaining Your Math: Unnecessary at Best, Encumbering at Worst,” which appeared in The Atlantic last month, is a must-read for anyone who wants to understand just how problematic some of Common Core’s assumptions about learning are, particularly as they pertain to requiring young children to explain their reasoning in writing.

(Side note: I’m not sure what’s up with The Atlantic, but they’ve at least partially redeemed themselves for the very, very factually questionable piece they recently ran about the redesigned SAT. Maybe the editors have realized how much everyone hates Common Core by this point and thought it would be in their best interest to jump on the bandwagon, but don’t think that the general public has yet drawn the connection between CC and the Coleman-run College Board?)

I’ve read some of Barry’s critiques of Common Core before, and his explanations of “rote understanding” in part provided the framework that helped me understand just what “supporting evidence” questions on the reading section of the new SAT are really about.

Barry and Katherine’s article is worth reading in its entirety, but one point struck me as particularly salient:

Math learning is a progression from concrete to abstract…Once a particular word problem has been translated into a mathematical representation, the entirety of its mathematically relevant content is condensed onto abstract symbols, freeing working memory and unleashing the power of pure mathematics. That is, information and procedures that have become automatic free up working memory. With working memory less burdened, the student can focus on solving the problem at hand. Thus, requiring explanations beyond the mathematics itself distracts and diverts students away from the convenience and power of abstraction. Mandatory demonstrations of “mathematical understanding,” in other words, can impede the “doing” of actual mathematics.

Although it’s not an exact analogy, many of these points have verbal counterparts. Reading is also a progression from concrete to abstract: first, students learn that letters are represented as abstract symbols, and that those symbols correspond to specific sounds, which get combined in various ways. When students have mastered the symbol/sound relationship (decoding) and encoded it in their brains, their working memories are freed up to focus on the content of what they are reading, a switch that normally occurs around third or fourth grade.

Amazingly, Common Core does not prescribe that students compose paragraphs (or flow charts) demonstrating, for example, that they understand why c-a-t spells cat. (Actually, anyone, if you have heard of such an exercise, please let me know. I just made that up, but given some of the stories I’ve heard about what goes on in classrooms these days, I wouldn’t be surprised if someone, somewhere were actually doing that.)

What CC does, however, is a slightly higher-level equivalent — namely, requiring the continual citing of textual “evidence.” As I outlined in my last couple of posts, CC, and thus the new SAT, often employs a very particular definition of “evidence.” Rather than use quotations, etc. to support their own ideas about a work or the arguments it contains (arguments that would necessarily reveal background knowledge and comprehension, or lack thereof), students are required to demonstrate their comprehension over and over again by “staying within the four corners of the text,” repeatedly returning to it to cite key words and phrases that reveal its meaning — in other words, their understanding of the (presumably) self-evident principle that a text means what it means because it says what it says. As is true for math, this entire approach to reading confuses demonstration of a skill with “deep” possession of that skill.

That, of course, has absolutely nothing to do with how reading works in the real world. Nobody, nobody, reads this way. Strong readers do not need to stop repeatedly in order to demonstrate that they understand what they’re reading. They do not need to point to words or phrases and announce that they mean what they mean because they mean it. Rather, they indicate their comprehension by discussing (or writing about) the content of the text, by engaging with its ideas, by questioning them, by showing how they draw on or influence the ideas of others, by pointing out subtleties other readers might miss… the list goes on and on.

Incidentally, I’ve had adults gush to me that their children/students are suddenly acquiring all sorts of higher-level skills, like citing texts and using evidence, but I wonder whether they’re actually being taken in by appearances. As I mentioned in my last post, although it may seem that children being taught this way are performing a sophisticated skill (“rote understanding”), they are actually performing a very basic one. I think Barry puts it perfectly when he says that it is as if the purveyors of these practices are saying: “If we can just get them to do things that look like what we imagine a mathematician does, then they will be real mathematicians.”

In that context, these parents’/teachers’ reactions are entirely understandable: the logic of what is actually going on is so bizarre and runs so completely counter to a commonsense understanding of how the world works that such an explanation would occur to virtually no one who hadn’t spent considerable time mucking around in the CC dirt.

To get back to my original point, though, the obsessive focus on the text itself, while certainly appropriate in some situations, ultimately serves to prohibit students from moving beyond the text, from engaging with its ideas in any substantive way. But then, I suspect that this limited, artificial type of analysis is actually the goal.

I think that what it ultimately comes down to is assessment — or rather the potential for electronic assessment. Students’ own arguments are messier, less “objective,” and more complicated, and thus more expensive, to assess. Holistic, open-ended assessment just isn’t scalable the same way that computerized multiple choice tests are, and choosing/highlighting specific lines of a text is an act that lends itself well to (cheap, automated) electronic grading. And without these convenient types of assessments, how could the education market ever truly be brought to scale?

by Erica L. Meltzer | Nov 29, 2015 | Blog, The New SAT

In my previous post, I examined the ways in which most so-called “supporting evidence” questions on the new SAT are not really about “evidence” at all, but are actually literal comprehension questions in disguise.

So to pick up where I left off, why exactly is the College Board reworking what are primarily literal comprehension questions in such an unnecessarily complicated way?

I think there are a couple of (interrelated) reasons.

One is to create an easily quantifiable way of tracking a particular “critical thinking” skill. According to the big data model of the world, things that cannot be tagged, and thus analyzed quantitatively, do not exist. (I’m tagged, therefore I am.) According to this view, the type of open-ended analytical essays that actually require students to formulate their own theses and analyze source material are less indicative of the ability to use evidence than are multiple-choice tests. Anything holistic is suspect.

The second reason – the one I want to focus on here – is to give the illusion of sophistication and “rigor.”

Let’s start with the fact that the new SAT is essentially a Common Core capstone test, and that high school ELA Common Core Standards consist pretty much exclusively of formal skills, e.g. identifying main ideas, summarizing, comparing and contrasting; specific content knowledge is virtually absent. As Bob Shepherd puts it, “Imagine a test of biology that left out almost all world knowledge about biology and covered only biology “skills” like—I don’t know—slide-staining ability.”

At the same time, though, one of the main selling points of Common Core has been that it promotes “critical thinking” skills and leads to the development of “higher-order thinking skills.”

The problem is that genuine “higher order thinking” requires actual knowledge of a subject; it’s not something that can be done in a box. But even though the new SAT is being touted as a “curriculum-based test,” it can’t explicitly require any sort of pre-existing factual knowledge – at least not on the verbal side. Indeed, the College Board is very clear about insisting that no particular knowledge of (mere rote) facts is needed to do well on the test. So there we have a paradox.

To give the impression of increased rigor, then, the only solution was to create an exam that tested simple skills in inordinately convoluted ways – ways that are largely detached from how people actually read and write, and that completely miss the point of how those skills are applied in the real world.

That is, not coincidentally, exactly the same criticism that is consistently directed at Common Core as a whole, as well as all the tests associated with it. (Remember comedian Louis C.K.’s rant about trying to help his daughter with her homework?)

In practice, “using evidence” is not an abstract formal skill but a context-dependent one that arises out of specific knowledge of a subject. What the new SAT is testing is something subtly but significantly different: whether a given piece of information is consistent with a given claim.

But, you say, isn’t that the very definition of evidence? Well…sort of. But in the real world (or at least that branch of it not dominated by people completely uninterested in factual truth), “using evidence” isn’t simply a matter of identifying what texts say, i.e. comprehension, but rather using information, often from a variety of sources, to support an original argument. That information must not only be consistent with the claim it is used to support, but it must also be accurate.

To use evidence effectively, it is necessary to know what sources to consult and how to locate them; to be aware of the context in which those sources were produced; and to be capable of judging the validity of the information they present — all things that require a significant amount of factual knowledge.

Evidence that is consistent with a claim can also be suspect in any number of ways. It can be partially true, it can be distorted, it can be underreported, it can be exaggerated, it can be outright falsified… and so on. But there is absolutely no way to determine any of these things in the absence of contextual/background knowledge of the subject at hand.

Crucially, there is also no way to leap from practicing the formal skill of “using evidence,” as the College Board defines it, to using evidence in the real world, or at least in the way that college professors and employers will expect students/employees to use it. If you don’t know a lot about a subject, your ability to analyze – or even to fully comprehend – arguments concerning it will be limited, regardless of how much time you have spent labeling main ideas and supporting details. That is why even the most motivated students can hit the 700 wall in SAT Critical Reading, sometimes while scoring 800s in Math and Writing; there are a sufficient number of holes in their general knowledge that there’s always something they misunderstand. There is no short-term way to get around that weakness, no matter how many “main point” or “primary purpose” questions they do.

This (mis)conception of “evidence” as a strictly formal skill leads to a parody of what real-world academic inquiry actually consists of. A 16-year-old might impress her teacher by throwing around words like “discourse,” but that does not mean that her analytical abilities are in any way comparable to those of a 50-year-old tenured historian with a Ph.D., a list of peer-reviewed articles, and a couple of books under her belt — not to mention a rock-solid understanding of the chronology, major players, and running debates in her particular area of specialization, as well as the ability to sit still, take notes, and listen to her colleagues speak for long stretches at a time. Yet the College Board is effectively insisting that by superficially mimicking certain aspects of the work that actual scholars do, teenagers can leapfrog over years of hard work and magically acquire adult skills. (Ever watched a high school sophomore try to complete an exercise in “historical thinking” about the Spanish conquest of the New World when she isn’t quite sure who the Amerindians were? I have, and it’s not pretty.)

In his “Common-Sense Approach to Common Core Math” series, Barry Garelick makes this point as well:

[Students] are taught to reproduce explanations that make it appear they possess understanding—and more importantly, to make such demonstrations on the standardized tests that require them to do so. And while “drill and kill” has been held in disdain by math reforms, students are essentially “drilling understanding.”

The repeated going back to the text to answer “evidence” questions serves exactly the same purpose; it gives the appearance that students are performing a sophisticated skill when in fact they’re doing nothing of the sort. The underlying issue, namely that students might not actually understand what they read because of deficiencies in vocabulary and background knowledge, is conveniently sidestepped.

I suspect that the College Board’s “skills and knowledge” slogan was created in an attempt to head off this criticism. By cannily associating (eliding) those two things, the College Board implies that it knows just what this whole education thing is really about, and that the new SAT reflects…well, all that good stuff.

Let us recall, though, that Common Core standards essentially had to consist of a series of empty formal skills slapped together and pushed through as quickly as possible in order to circumvent close investigation or pushback. Factual knowledge was an afterthought. It was never dealt with because it was politically inconvenient and could easily have led to the sort of controversy that would have deterred governors from signing on to the standards.

As a result, proponents of Common Core are left to assert that the knowledge element will somehow just take care of itself. Exactly how that is supposed to happen is never explained, but rest assured, it just will. That is how you get nonsensical articles like Natalie Wexler’s New York Times piece, “How Common Core Can Help in the Battle of Skills vs. Knowledge”. As it turns out, Wexler chairs the board of trustees at an organization called Writing Revolution… an organization that David Coleman just happens to sit on the board of. That’s quite a coincidence, is it not?

by Erica L. Meltzer | Nov 8, 2015 | Blog, Issues in Education, The New SAT

I’ve been doing some more pondering about the claims of increased equity attached to the new SAT, and although I’m still trying to sift through everything, I’ve at least managed to put my finger on something that’s been nagging at me.

Basically, the College Board is now espousing two contradictory views: on one hand, it trumpets things like the inclusion of “founding documents” in order to proclaim that the SAT will be “more aligned with what students are doing in school,” and on the other hand, it insists that no particular outside knowledge is necessary to do well on the reading portion of the exam.

While that assertion may contain a grain of truth – some very strong readers with just enough background knowledge will be able to navigate the test without excessive difficulty – it is also profoundly disingenuous. Comprehension can never be completely divorced from knowledge, and even middling readers who have been fed a steady diet of “founding documents” in their APUSH classes will be at a significant advantage over even strong readers with no prior knowledge of those passages.

You really can’t have it both ways. If background knowledge truly isn’t important and the SAT is designed to be as close to a pure reading test as possible, then every effort should be made to use passages that the vast majority of students are unlikely to have already seen. (That perspective, incidentally, is the basis for the current SAT.)

On the flip side, a test that is truly intended to be aligned with schoolwork can only be fair if everyone taking the test is doing the same schoolwork – a virtual impossibility in the United States. That’s not a bug in the system, so to speak; it is the system. Even if Common Core had been welcomed with open arms, there would still be a staggering amount of variation.

To state what should be obvious, it is impossible to design an exam that is aligned with “what students are learning in school” when even students in neighboring towns – or even at two different schools in the same town – are doing completely different things. Does anyone sincerely think that students in public school in the South Bronx are doing the same thing as those at Exeter? Or, for that matter, that students in virtual charter schools in Mississippi are doing the same things as those in public school in Scarsdale? Yet all of these students will be taking the exact same test.

The original creators of the SAT were perfectly aware of how dramatically unequal American education was; they knew that poorer students would, on the whole, score below their better-off peers. Their primary goal was to identify the relatively small number of students from modest backgrounds who were capable of performing at a level comparable to students at top prep schools. Knowing that the former had not been exposed to the same quality of curriculum as the latter, they deliberately designed a test that was as independent as possible from any particular curriculum.

So when people complain that the SAT doesn’t reflect, or has somehow gotten away from, “what students are doing in school,” they are, in some cases very deliberately, missing the whole point – the test was never intended to be aligned with school in the first place. Given the American attachment to local control of education, that was not an irrational decision. (Although I somehow doubt they could have ever imagined the industry, not to mention the accompanying stress, that would eventually grow up around the exam.)

Viewed in this light, the attempt to create a school-aligned SAT can actually be seen as a step backwards – one that either dramatically overestimates the power of Common Core to standardize curricula, or that simply turns a blind eye to very substantial differences in the type of work that students are actually doing in school.

The problem is even more striking on the math side than on the verbal. As Jason Zimba, who led the Common Core math group, admitted, Common Core math is not intended to go beyond Algebra II, yet the SAT math section will now include questions dealing with trigonometry – a subject to which many juniors will not yet have been exposed. (For more about the problems with math on the new exam, see “The Revenge of K-12: How Common Core and the New SAT Lower College Standards in the U.S.” as well as “Testing Kids on Content They’ve Never Learned” by blogger Jonathan Pelto.) In that regard, the math section of the new SAT is even more misaligned with schoolwork than the reading section. The current exam, in contrast, does not go beyond Algebra II – the focus is on material that pretty much everyone taking a standard college-prep program has covered.

The unfortunate reality is that disparities in test scores reflect larger educational disparities; it’s a lot easier to blame a test than to address underlying issues, for example the relationship between tax dollars and public school funding. Yes, the test plays a role in the larger system of inequality, but it is one factor, not the primary cause. Any nationally-administered exam, whatever it happens to be called, will to some extent reflect the gap (unless, of course, you start with the explicit goal of engineering a test on which everyone can do well and work backwards to create an exam that ensures that outcome).

Assuming, however, that the goal is not to design an exam that everyone aces, then there’s no obvious way out of the impasse. Create an exam that isn’t tied to any particular curriculum, and students are forced to take time away from school to prepare. Create an exam that’s “curriculum-based,” and you inevitably leave out huge numbers of students since there’s no such thing as a standard curriculum.

Damned if you do, damned if you don’t.

by Erica L. Meltzer | Oct 19, 2015 | Blog, Issues in Education

I stumbled across this priceless little ditty left by SomeDAM Poet in the comments section of this post on Diane Ravitch’s blog and felt compelled to repost it:

“Don’t drink the Colemanade”

Stop! Don’t drink the Colemanade!

The Coleman Core that Coleman made

What Coleman aided has culminated

In public schools calumniated

And to that I would add my own poetic two cents:

The Colemanade that Coleman made

Has percolated unallayed

In classrooms Coleman has created

His Standards march on, unabated

Now Coleman’s Core and Coleman’s aid

Do flow to schools now desiccated

The urgency must be conveyed

to clean the mess that Coleman made.

In all seriousness, though, anyone who’s even the least bit concerned about Common Core should read Diane’s post. And Lyndsey Layton’s article. And Mercedes Schneider’s books (both of them, actually).

I cannot overstate the importance of what these people have to say about public education in the United States right now.