by Erica L. Meltzer | Apr 1, 2016 | Blog, The New SAT

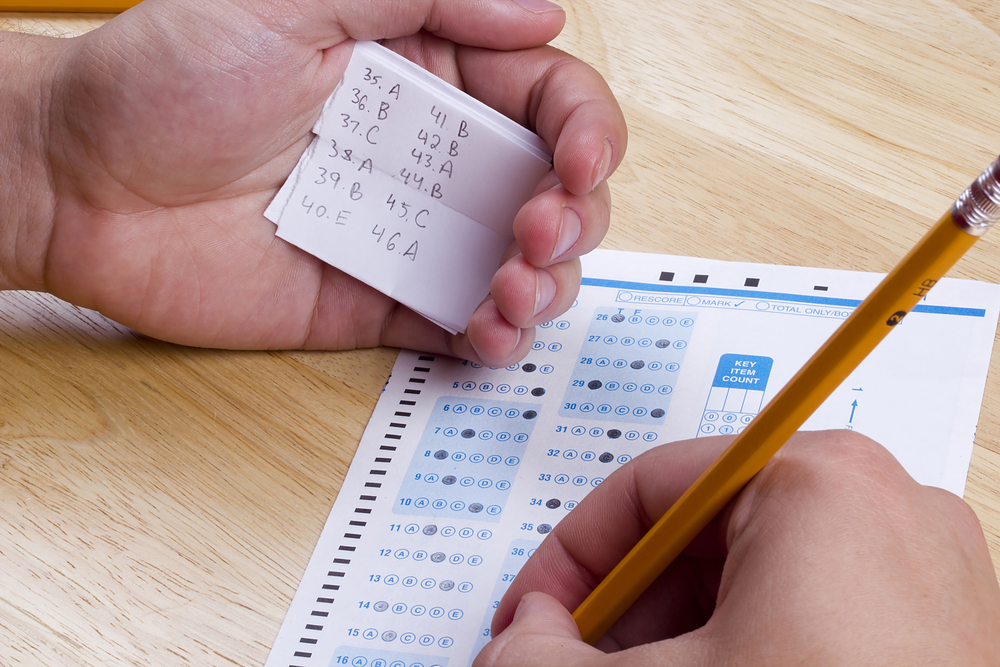

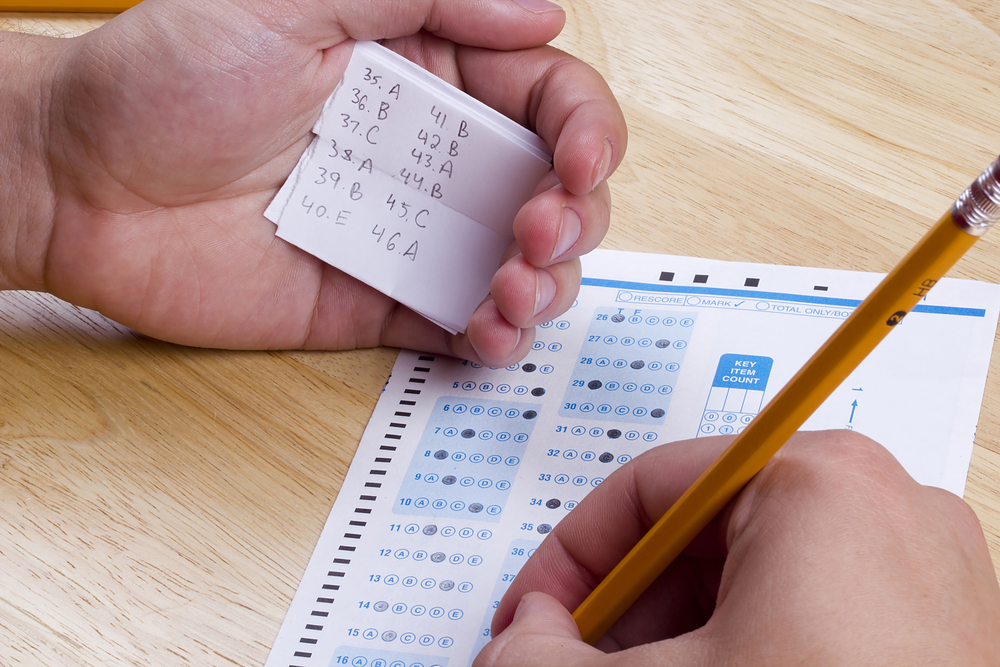

As predicted, the College Board’s decision to bar tutors from the first administration of the new SAT had little effect on the security of the test: questions from the March 5th administration quickly appeared on various Chinese websites as well as on College Confidential.

Reuters has now broken a major story detailing the SAT “cartels” that have sprung up in Asia, as well as the College Board’s inconsistent and lackluster response to what is clearly a serious and widespread problem.

It’s a two-part series, and it squarely takes the College Board to task for allowing the breaches.

by Erica L. Meltzer | Mar 27, 2016 | Blog

As I’ve written recently, the SAT’s transformation from a predictive, college-aligned test into a test of skills associated with high school is one of the most overlooked changes in the discussion surrounding the new exam. Along with the elimination of ETS from the test-writing process, it signals just how radically the exam has been overhauled.

Although most discussions of the rSAT note that the test is intended to be “more aligned with what students are doing in school,” this alignment is in reality an almost entirely new development rather than a “bringing back” of sorts. The implication (that the SAT was once intended to be aligned with a high school curriculum but has drifted away from that goal) is essentially false.

by Erica L. Meltzer | Mar 24, 2016 | Blog

The College Board has marketed the redesigned SAT to students and families by emphasizing the ways in which it will reduce stress and lessen the need for paid test preparation. One common response to this campaign is to insist that those factors are beside the point: the College Board can market the test to students and families all it wants, but the test exists to serve colleges’ needs rather than students’ needs.

That’s certainly a valid point, but I think that underlying these comments is the assumption that colleges are primarily interested in identifying the strongest students when making admissions decisions. If that were true, a test that didn’t make sufficient distinctions between high-scoring applicants wouldn’t be useful to them. But that belief rests on a misunderstanding of how the American college admissions system works. So to talk about how the new SAT fits into the admissions landscape, and why colleges might be so receptive to an exam that produces higher scores, it’s helpful to start with a detour.

by Erica L. Meltzer | Mar 22, 2016 | Blog, The New SAT

Apparently I’m not the only one who has noticed something very odd about PSAT score reports. California-based Compass Education has produced a report analyzing some of the inconsistencies in this year’s scores.

The report raises more questions than it answers, but the findings themselves are very interesting. For anyone who has the time and the inclination, it’s well worth reading.

Some of the highlights include:

- Test-takers are compared to students who didn’t even take the test and may never take it.

- In calculating percentiles, the College Board relied on an undisclosed sampling method when it could have relied on scores from students who actually took the exam.

- 3% of students scored in the 99th percentile.

- In some parts of the scale, scores were raised as much as 10 percentage points between 2014 and 2015.

- More sophomores than juniors obtained top scores.

- Reading/writing benchmarks for both sophomores and juniors have been lowered by over 100 points; at the same time, the elimination of the wrong-answer penalty would permit a student to approach the benchmark while guessing randomly on every single question.

by Erica L. Meltzer | Mar 20, 2016 | Blog, The New SAT

Following the first administration of the new SAT, the College Board released a highly unscientific survey comparing 8,089 March 2016 test-takers with 6,494 March 2015 test-takers.

You can read the whole thing here, but in case you don’t care to, here are some highlights:

- 75% of students said the Reading Test was the same as or easier than they expected.

- 80% of students said the vocabulary on the test would be useful to them later in life, compared with 55% in March 2015.

- 59% of students said the Math section tests the skills and knowledge needed for success in college and career.

Leaving aside the absence of basic background information that would allow a reader to evaluate how seriously to take this report (why were different numbers of test-takers surveyed in 2015 vs. 2016? who exactly were these students? how were they chosen for the survey? what were their socio-economic backgrounds? what sorts of high schools did they attend, and what sorts of classes did they take? what sorts of colleges did they intend to apply to? were the two groups demographically comparable? etc., etc.), this is quite a remarkable set of statements.