by Erica L. Meltzer | Jan 17, 2016 | Blog, The New SAT

A couple of posts back, I wrote about a recent Washington Post article in which a tutor named Ned Johnson pointed out that the College Board might be giving students an exaggeratedly rosy picture of their performance on the PSAT by creating two score percentiles: a “user” percentile based on the group of students who actually took the test, and a “national percentile” based on how the student would rank if every 11th (or 10th) grader in the United States took the test. The latter is almost guaranteed to be higher than the user percentile.

When I read Johnson’s analysis, I assumed that both percentiles would be listed on the score report. But actually, there’s an additional layer of distortion not mentioned in the article.

I stumbled on it quite by accident. I’d seen a PDF-form PSAT score report, and although I only recalled seeing one set of percentiles listed, I assumed that the other set must be on the report somewhere and that I simply hadn’t noticed them.

A few days ago, however, a longtime reader of this blog was kind enough to offer me access to her son’s PSAT so that I could see the actual test. Since it hasn’t been released in booklet form, the easiest way to give me access was simply to let me log in to her son’s account (it’s amazing what strangers trust me with!).

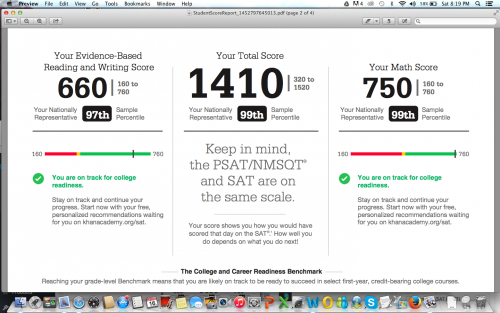

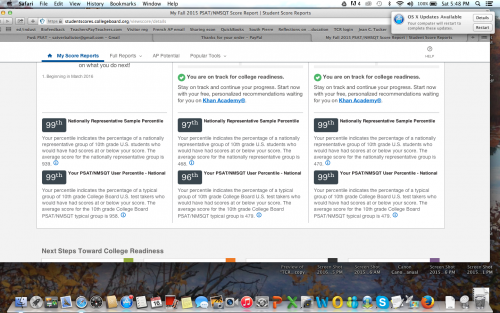

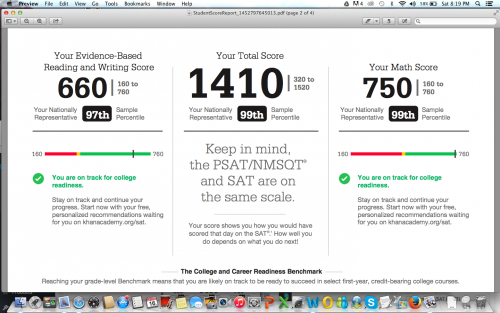

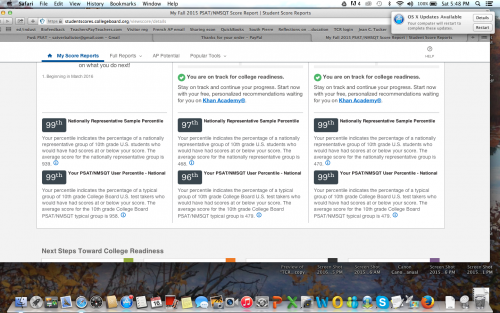

When I logged in, I did in fact see the two sets of percentiles, with the national, higher percentile of course listed first. But then I noticed the “download report” button, and something occurred to me. The earlier PDF report I’d seen absolutely did not present the two sets of percentiles as clearly as the online report did — of that I was positive.

So I downloaded a report, and sure enough, only the national percentiles were listed. The user percentile (the ranking based on the group of students who actually took the test) was completely absent. I looked over every inch of that report, as well as the earlier report I’d seen, and I could not find the user percentile anywhere.

Unfortunately (well, fortunately for him, unfortunately for me), the student in question had scored extremely well, so the discrepancy between the two percentiles was barely noticeable. For a student with a score 200 points lower, the gap would be more pronounced. Nevertheless, I’m posting the two images here (with permission) to illustrate the difference in how the percentiles are reported on the different reports.

Somehow I didn’t think the College Board would be quite so brazen in its attempt to mislead students, but apparently I underestimated how dirty they’re willing to play. Giving two percentiles is one thing, but omitting the lower one entirely from the report format that most people will actually pay attention to is really a new low.

I’ve been hearing tutors comment that they’ve never seen so many students obtain reading scores in the 99th percentile, which apparently extends all the way down to 680/760 for the national percentile, and 700/760 for the user percentile. Well…that’s what happens when a curve is designed to inflate scores. But hey, if it makes students and their parents happy, and boosts market share, that’s all that counts, right? Shareholders must be appeased.

Incidentally, the “college readiness” benchmark for 11th grade reading is now set at 390. 390. I confess: I tried to figure out what that corresponds to on the old test, but looking at the concordance chart gave me such a headache that I gave up. (If anyone wants to explain it to me, you’re welcome to do so.) At any rate, it’s still shockingly low (the benchmark on the old test was 550), as well as a whopping 110 points lower than the math benchmark. There’s also an “approaching readiness” category, which further extends the wiggle room.

A few months back, before any of this had been released, I wrote that the College Board would create a curve to support the desired narrative. If the primary goal was to pave the way for a further set of reforms, then scores would fall; if the primary goal was to recapture market share, then scores would rise. I guess it’s clear now which way they decided to go.

by Erica L. Meltzer | Jan 10, 2016 | Blog, The New SAT

Apparently I’m not the only one who thinks the College Board might be trying to pull some sort of sleight-of-hand with scores for the new test.

In this Washington Post article about the (extremely delayed) release of 2015 PSAT scores, Ned Johnson of PrepMatters writes:

Here’s the most interesting point: College Board seems to be inflating the percentiles. Perhaps not technically changing the percentiles but effectively presenting a rosier picture by an interesting change to score reports. From the College Board website, there is this explanation about percentiles:

Percentiles

A percentile is a number between 0 and 100 that shows how you rank compared to other students. It represents the percentage of students in a particular grade whose scores fall at or below your score. For example, a 10th-grade student whose math percentile is 57 scored higher or equal to 57 percent of 10th-graders.

You’ll see two percentiles:

The Nationally Representative Sample percentile shows how your score compares to the scores of all U.S. students in a particular grade, including those who don’t typically take the test.

The User Percentile — Nation shows how your score compares to the scores of only some U.S. students in a particular grade, a group limited to students who typically take the test.

What does that mean? Nationally Representative Sample percentile is how you would stack up if every student took the test. So, your score is likely to be higher on the scale of Nationally Representative Sample percentile than actual User Percentile.

On the PSAT score reports, College Board uses the (seemingly inflated) Nationally Representative score, which, again, bakes in scores of students who DID NOT ACTUALLY TAKE THE TEST but, had they been included, would have presumably scored lower. The old PSAT gave percentiles of only the students who actually took the test.

For example, I just got a score from a junior; 1250 is reported 94th percentile as Nationally Representative Sample percentile. Using the College Board concordance table, her 1250 would be a selection index of 181 or 182 on last year’s PSAT. In 2014, a selection index of 182 was 89th percentile. In 2013, it was 88th percentile. It sure looks to me that College Board is trying to flatter students. Why might that be? They like them? Worried about their feeling good about the test? Maybe. Might it be a clever statistical sleight of hand to make taking the SAT seem like a better idea than taking the ACT? Nah, that’d be going too far.

I’m assuming that last sentence is intended to be taken ironically.
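For anyone who wants to see the mechanics, here is a minimal sketch of how the same score can earn two different percentiles. It uses the definition quoted above (the percentage of students whose scores fall at or below yours). Every number in it is invented for illustration, and the assumption that non-test-takers would score lower is Johnson’s, not something the code demonstrates.

```python
def percentile_rank(score, scores):
    """Percentage of scores in `scores` that fall at or below `score`."""
    at_or_below = sum(1 for s in scores if s <= score)
    return 100 * at_or_below / len(scores)

# Hypothetical scores of students who actually took the test (invented data).
test_takers = [900, 1000, 1100, 1200, 1250, 1300, 1400, 1500]

# Hypothetical scores for students who would not typically take the test,
# assumed here to fall lower on the scale (invented data).
non_takers = [700, 800, 850, 950]

# "User" percentile: ranked only against actual test-takers.
user_pct = percentile_rank(1250, test_takers)

# "Nationally representative" percentile: ranked against everyone.
national_pct = percentile_rank(1250, test_takers + non_takers)

print(user_pct, national_pct)
```

With these made-up numbers, the same 1250 lands at 62.5 against actual test-takers and at 75.0 once the lower-scoring non-takers are added to the pool. The score has not changed; only the comparison group has.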

One quibble. Later in the article, Johnson also writes that “If the PSAT percentiles are in fact “enhanced,” they may not be perfect predictors of SAT success, so take a practice SAT.” But if PSAT percentiles are “enhanced,” who is to say that SAT percentiles won’t be “enhanced” as well?

Based on the revisions to the AP exams, the College Board’s formula seems to go something like this:

(1) Take a well-constructed, reasonably valid test, one for which years of collected data exist, and declare that it is no longer relevant to the needs of 21st century students.

(2) Replace existing test with a more “holistic,” seemingly more rigorous exam, for which the vast majority of students will be inadequately prepared.

(3) Create a curve for the new exam that artificially inflates scores.

(4) Proclaim students “college ready” when they may be still lacking fundamental skills.

(5) Find another exam, and repeat the process.

by Erica L. Meltzer | Dec 29, 2015 | Blog, The New SAT

Among the partial truths disseminated by the College Board, the phrase “guessing penalty” ranks way up there on the list of things that irk me most. In fact, I’d say it’s probably #2, after the whole “obscure vocabulary” thing.

Actually, calling it a partial truth is generous. It’s actually more of a distortion, an obfuscation, a misnomer, or, to use a “relevant” word, a lie.

Let’s deconstruct it a bit, shall we?

It is of course true that the current SAT subtracts an additional ¼ point for each incorrect answer. While this state of affairs is a perennial irritant to test-takers, not to mention a contributing factor to the test’s reputation for “trickiness,” it nevertheless serves a very important purpose – namely, it functions as a corrective to prevent students from earning too many points from lucky guessing and thus from achieving scores that seriously misrepresent what they actually know. (more…)
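The arithmetic behind that corrective is easy to check. The sketch below assumes five answer choices per question and one point per correct answer; the function name and setup are mine and purely illustrative.

```python
from fractions import Fraction

def expected_guess_score(num_choices, penalty):
    """Expected points from one random guess among `num_choices` options,
    assuming +1 for a correct answer and -`penalty` for an incorrect one."""
    p_correct = Fraction(1, num_choices)
    return p_correct * 1 - (1 - p_correct) * penalty

# Blind guess among five choices with the quarter-point deduction.
blind_guess = expected_guess_score(5, Fraction(1, 4))

# The same blind guess with no deduction at all.
no_deduction = expected_guess_score(5, Fraction(0))

# Guess after eliminating one choice, with the deduction still in place.
after_elimination = expected_guess_score(4, Fraction(1, 4))

print(blind_guess, no_deduction, after_elimination)
```

Under those assumptions, a blind guess averages out to exactly zero, so random guessing neither helps nor hurts. Without the deduction it averages a fifth of a point per question, and a student who can rule out even one choice comes out slightly ahead (1/16 of a point) with the deduction still in place.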

by Erica L. Meltzer | Dec 27, 2015 | Blog, The New SAT

In a Washington Post article describing the College Board’s attempt to capture market share back from the ACT, Nick Anderson writes:

Wider access to markets where the SAT now has a minimal presence would heighten the impact of the revisions to the test that aim to make it more accessible. The new version [of the SAT], debuting on March 5, will eliminate penalties for guessing, make its essay component optional and jettison much of the fancy vocabulary, known as “SAT words,” that led generations of students to prepare for test day with piles of flash cards.

Nick Anderson might be surprised to discover that “jettison” is precisely the sort of “fancy” word that the SAT tests.

But then again, that would require him to do research, and no education journalist would bother to do any of that when it comes to the SAT. Because, like, everyone just knows that the SAT only tests words that no one actually uses. (more…)

by Erica L. Meltzer | Dec 26, 2015 | Blog, SAT Essay, The New SAT

For the last part in this series, I want to consider the College Board’s claim that the redesigned SAT essay is representative of the type of assignments students will do in college.

Let’s start by considering the sorts of passages that students are asked to analyze.

As I previously discussed, the redesigned SAT essay is based on the rhetorical essay from the AP English Language and Composition (AP Comp) exam. While they comprise a wide range of themes, styles, and periods, the passages chosen for that test are usually selected because they are exceptionally interesting from a rhetorical standpoint. Even if the works they are excerpted from would most likely be studied in their social/historical context in an actual college class, it makes sense to study them from a strictly rhetorical angle as well. Different types of reading can be appropriate for different situations, and this type of reading in this particular context is well justified.

In contrast, the texts chosen for analysis on the new SAT essay are essentially the type of humanities and social science passages that routinely appear on the current SAT – serious, moderately challenging contemporary pieces intended for an educated general adult audience. To be sure, this type of writing is not completely straightforward: ideas and points of view are often presented in a manner that is subtler than what most high school readers are accustomed to, and authors are likely to make use of the “they say/I say” model, dialoguing with and responding to other people’s ideas. Most students will in fact do a substantial amount of this type of reading in college.

By most academic standards, however, these types of passages would not be considered rhetorical models. It is possible to analyze them rhetorically – it is possible to analyze pretty much anything rhetorically – but a more relevant question is why anyone would want to analyze them rhetorically. Simply put, there usually isn’t all that much to say. As a result, it’s entirely unsurprising that students will resort to flowery, overblown descriptions that are at odds with the actual moderate tone and content of the passages. In fact, that will often be the only way that students can produce an essay that is sufficiently lengthy to receive a top score.

There are, however, a couple of even more serious issues.

First, although the SAT essay technically involves an analysis, it is primarily a descriptive essay in the sense that students are expected neither to engage with the ideas in the text nor to offer up any ideas of their own. With exceedingly few exceptions, however, the writing that students are asked to do in college will be thesis-driven in the traditional sense – that is, students will be required to formulate their own original arguments, which they then support with various pieces of specific evidence (facts, statistics, anecdotes, etc.). Although they may be expected to take other people’s ideas into account and “dialogue” with them, they will generally be asked to do so as a launching pad for their own ideas. They may on occasion find it necessary to discuss how a particular author presents his or her evidence in order to consider a particular nuance or implication, but almost never will they spend an entire assignment focusing exclusively on the manner in which someone else presents an argument. So although the skills tested on the SAT essay may in some cases be a useful component of college work, the essay itself has virtually nothing to do with the type of assignments students will actually be expected to complete in college.

By the way, for anyone who wants to understand the sort of work that students will genuinely be expected to do in college, I cannot recommend Gerald Graff and Cathy Birkenstein’s They Say/I Say strongly enough. This is a book written by actual freshman composition instructors with decades of experience. Suffice it to say that it doesn’t have much to do with what the test-writers at the College Board imagine that college assignments look like.

Now for the second point: the “evidence” problem.

As I’ve mentioned before, the SAT essay prompt does not explicitly ask students to provide a rhetorical analysis; rather, it asks them to consider how the writer uses “evidence” to build his or her argument. That sounds like a reasonable task on the surface, but it falls apart pretty quickly once you start to consider its implications.

When students do the type of reading that the SAT essay tests in college, it will pretty much always be in the context of a particular subject (sociology, anthropology, economics, etc.). By definition, non-fiction is both dependent on and engaged with the world outside the text. There is no way to analyze that type of writing meaningfully or effectively without taking that context into account. Any linguistic or rhetorical analysis would always be informed by a host of other, external factors that pretty much any professor would expect a student to discuss. There is a reason that “close reading” is normally associated with fiction and poetry, whose meanings are far less dependent on outside factors. Any assignment that asks students to analyze a non-fiction author’s use of evidence without considering the surrounding context is therefore seriously misrepresenting what it means to use evidence in the real world.

In college and in the working world, the primary focus is never just on how evidence is presented, but rather on how valid that evidence is. You cannot simply present any old facts that happen to be consistent with the claim you are making – those facts must actually be true, and any competent analysis must take that factor into account. The fact that professors and employers complain that students/employees have difficulty using evidence does not mean that the problem can be solved just by turning “evidence” into a formal skill. Rather, I would argue that the difficulties students and employees have in using evidence effectively are actually symptoms of a deeper problem, namely a lack of knowledge and perhaps a lack of exposure to (or an unwillingness to consider) a variety of perspectives.

If you are writing a Sociology paper, for example, you cannot simply state that the author of a particular study used statistics to support her conclusion, or worse, claim that an author’s position is “convincing” or “effective,” or that it constitutes a “rich analysis” because the author uses lots of statistics as evidence. Rather, you are responsible for evaluating the conditions under which those statistics were gathered; for understanding the characteristics of the groups used to obtain those statistics; and for determining what factors may not have been taken into account in the gathering of those statistics. You are also expected to draw on socio-cultural, demographic, and economic information about the population being studied, about previous studies in which that population was involved, and about the conclusions drawn from those studies.

I could go on like this for a while, but I think you probably get the picture.

As I discussed in my last post, some of the sample essays posted by the College Board show a default position commonly adopted by many students who aren’t fully sure how to navigate the type of analysis the new SAT essay requires – something I called “praising the author.” Because the SAT is such an important test, they assume that any author whose work appears on it must be a pretty big deal. As a result, they figure that they can score some easy points by cranking up the flattery. Thus, authors are described as “brilliant” and “passionate” and “renowned,” even if they are none of those things.

As a result, the entire point of the assignment is lost. Ideally, the goal of close reading is to understand how an author’s argument works as precisely as possible in order to formulate a cogent and well-reasoned response. The goal is to comprehend, not to judge or praise. Otherwise, the writer risks setting up straw men and arguing in relation to positions that the author does not actually take.

The sample essay scoring, however, implies something different and potentially quite problematic. When students are rewarded for offering up unfounded praise and judgments, they can easily acquire the illusion that they are genuinely qualified to evaluate professional writers and scholars, even if their own composition skills are at best middling and they lack any substantial knowledge about a subject. As a result, they can end up confused about what academic writing entails, and about what is and is not appropriate/conventional (which again brings us back to They Say/I Say).

These are not theoretical concerns for me; I have actually tutored college students who used these techniques in their writing.

My guess is that a fair number of colleges will recognize just how problematic an assignment the new essay is and deem it optional. But that in turn creates an even larger problem. Colleges cannot very well go essay-optional on the SAT and not the ACT. So what will happen, I suspect, is that many colleges that currently require the ACT with Writing will drop that requirement as well – and that means highly selective colleges will be considering applications without a single example of a student’s authentic, unedited writing. Bill Fitzsimmons at Harvard came out so early and so strongly in favor of the SAT redesign that it would likely be too much of an embarrassment to renege later, and Princeton, Yale, and Stanford will presumably continue to go along with whatever Harvard does. Aside from those four schools, however, all bets are probably off.

If that shift does in fact occur, then no longer will schools be able to flag applicants whose standardized-test essays are strikingly different from their personal essays. There will be even less of a way to tell what is the result of a stubborn 17-year-old locking herself in her room and refusing to show her essays to anyone, and what is the work of a parent or an English teacher… or a $500/hr. consultant.